User Documentation

Important

DataBroker release 1.0 includes support for old-style “v1” usage and new-style “v2” usage. This section addresses databroker’s new “v2” usage. It is still under development and subject to change in response to user feedback.

For the stable “v1” usage, see Version 1 Interface. See Transition Plan for more information.

Walkthrough

Find a Catalog

When databroker is first imported, it searches for Catalogs on your system, typically provided by a Python package or configuration file that you or an administrator installed.

In [1]: from databroker import catalog

In [2]: list(catalog)

Out[2]: ['csx', 'chx', 'isr', 'xpd', 'sst', 'bmm', 'lix']

Each entry is a Catalog that databroker discovered on our system. In this example, we find Catalogs corresponding to different instruments/beamlines. We can access a subcatalog with square brackets, like accessing an item in a dictionary.

In [3]: catalog['csx']

Out[3]: csx:

args:

path: source/_catalogs/csx.yml

description: ''

driver: intake.catalog.local.YAMLFileCatalog

metadata: {}

List the entries in the ‘csx’ Catalog.

In [4]: list(catalog['csx'])

Out[4]: ['raw']

In this example we see a single Catalog, 'raw', which holds the raw data. Let’s access it and assign it to a variable for convenience.

In [5]: raw = catalog['csx']['raw']

This Catalog contains all the raw data taken at CSX. It contains many entries,

as we can see by checking len(raw), so listing it would take a while.

Instead, we’ll look up entries by name or by search.

Note

As an alternative to list(...), try using tab-completion to view your

options. Typing catalog[' and then hitting the TAB key will list the

available entries.

Also, these shortcuts can save a little typing.

# These three lines are equivalent.

catalog['csx']['raw']

catalog['csx', 'raw']

catalog.csx.raw # only works if the entry names are valid Python identifiers

Look up a Run by ID

Suppose you know the unique ID of a run (a.k.a. “scan”) that you want to access. Note that the first several characters will do; usually 6-8 are enough to uniquely identify a given run.

In [6]: run = raw[uid] # where uid is some string like '17531ace'

Each run also has a scan_id. The scan_id is usually easier to remember

(it’s a counting number, not a random string) but it may not be globally

unique. If there are collisions, you’ll get the most recent match, so the

unique ID is better as a long-term reference.

In [7]: run = raw[1]
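The two lookup rules above can be made concrete with a small stand-in. This sketch does not use databroker: lookup and the runs list are hypothetical, and only the first entry is taken from the example output in this document (the other two are invented). It mirrors the documented behavior, where a string is treated as a UID prefix and an integer is matched against scan_id with the most recent run winning. Treating an ambiguous prefix as an error is an assumption of the sketch.

```python
# Stand-in for a catalog: (uid, scan_id, start time) tuples.
# Only the first entry comes from this document; the others are made up.
runs = [
    ("e38f6b4b-bc44-48e3-acbc-2be7c07d58d5", 2, 1604527923.2195344),
    ("17531ace-0000-0000-0000-000000000000", 1, 1500000000.0),  # hypothetical
    ("9f2c41d0-0000-0000-0000-000000000000", 1, 1600000000.0),  # hypothetical
]


def lookup(key):
    """Return the full uid matching a partial uid (str) or a scan_id (int)."""
    if isinstance(key, int):
        matches = [r for r in runs if r[1] == key]
        if not matches:
            raise KeyError(key)
        # scan_id values may collide; the most recent run wins.
        return max(matches, key=lambda r: r[2])[0]
    matches = [r for r in runs if r[0].startswith(key)]
    if len(matches) != 1:
        # Assumption of this sketch: unknown or ambiguous prefixes are errors.
        raise KeyError(key)
    return matches[0][0]


print(lookup("17531ace"))  # unique prefix resolves to the full uid
print(lookup(1))           # two runs share scan_id 1; the newer one is returned
```

Because scan_id 1 resolves to whichever run is newest, the same call can return a different run later on, which is why the unique ID is the safer long-term reference.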

Search for Runs

Suppose you want to sift through multiple runs to examine a range of datasets.

In [8]: query = {'proposal_id': 12345} # or, equivalently, dict(proposal_id=12345)

In [9]: search_results = raw.search(query)

The result, search_results, is itself a Catalog.

In [10]: search_results

Out[10]: search results:

args:

auth: null

getenv: true

getshell: true

handler_registry:

NPY_SEQ: !!python/name:ophyd.sim.NumpySeqHandler ''

name: search results

paths:

- data/*.jsonl

query:

proposal_id: 12345

root_map: {}

storage_options: null

transforms:

descriptor: &id001 !!python/name:databroker.core._no_op ''

resource: *id001

start: *id001

stop: *id001

description: ''

driver: databroker._drivers.jsonl.BlueskyJSONLCatalog

metadata:

catalog_dir: /home/travis/build/bluesky/databroker/doc/source/_catalogs/

We can quickly check how many results it contains

In [11]: len(search_results)

Out[11]: 5

and, if we want, list them.

In [12]: list(search_results)

Out[12]:

['a2131d67-2ac9-4bc0-9b16-bfc38af99329',

'15214ea1-32bd-4195-8b02-c9cf1701f699',

'0aae2471-f0a6-40ff-8b15-a4b8d55250fd',

'2785d395-e6f6-46b0-be9e-4e8d7d0eee75',

'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5']

Because searching on a Catalog returns another Catalog, we can refine our search

by searching search_results. In this example we’ll use a helper,

TimeRange, to build our query.

In [13]: from databroker.queries import TimeRange

In [14]: query = TimeRange(since='2019-09-01', until='2040')

In [15]: search_results.search(query)

Out[15]: search results:

args:

auth: null

getenv: true

getshell: true

handler_registry:

NPY_SEQ: !!python/name:ophyd.sim.NumpySeqHandler ''

name: search results

paths:

- data/*.jsonl

query:

$and:

- proposal_id: 12345

- time:

$gte: 1567310400.0

$lt: 2209006800.0

root_map: {}

storage_options: null

transforms:

descriptor: &id001 !!python/name:databroker.core._no_op ''

resource: *id001

start: *id001

stop: *id001

description: ''

driver: databroker._drivers.jsonl.BlueskyJSONLCatalog

metadata:

catalog_dir: /home/travis/build/bluesky/databroker/doc/source/_catalogs/
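The $gte and $lt values in the combined query are Unix timestamps (seconds since 1970-01-01 UTC). The numbers shown are consistent with '2019-09-01' and '2040' having been interpreted as local midnight in US Eastern time. That time zone is an inference from the output, not something this page states. A quick standard-library check under that assumption:

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed UTC offsets for US Eastern time
# (daylight time in September, standard time in January).
EDT = timezone(timedelta(hours=-4))
EST = timezone(timedelta(hours=-5))

since = datetime(2019, 9, 1, tzinfo=EDT).timestamp()
until = datetime(2040, 1, 1, tzinfo=EST).timestamp()

print(since)  # 1567310400.0
print(until)  # 2209006800.0
```

If your own queries produce different numbers for the same strings, a different local time zone is the likely cause.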

More sophisticated queries are possible, such as filtering for scans that include more than 50 points.

search_results.search({'num_points': {'$gt': 50}})

See MongoQuerySelectors for more.
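To make the selector semantics concrete, here is a minimal pure-Python sketch of how such a query could be evaluated against a run's start document. It is not how databroker or MongoDB implements matching. The names matches and OPS are hypothetical, and only equality, $and, and four comparison selectors are handled.

```python
import operator

# Comparison selectors supported by this sketch (a small subset of MongoDB's).
OPS = {
    "$gt": operator.gt,
    "$gte": operator.ge,
    "$lt": operator.lt,
    "$lte": operator.le,
}


def matches(doc, query):
    """Return True if the dict `doc` satisfies the Mongo-style `query`."""
    for key, cond in query.items():
        if key == "$and":
            # Every sub-query in the list must match.
            if not all(matches(doc, sub) for sub in cond):
                return False
        elif isinstance(cond, dict):
            # Selector form, e.g. {'num_points': {'$gt': 50}}
            if key not in doc:
                return False
            if not all(OPS[op](doc[key], val) for op, val in cond.items()):
                return False
        elif doc.get(key) != cond:
            # A plain value means equality, e.g. {'proposal_id': 12345}
            return False
    return True


# Fields taken from the start document of the example run in this walkthrough.
start = {"proposal_id": 12345, "num_points": 3, "time": 1604527923.2195344}
query = {
    "$and": [
        {"proposal_id": 12345},
        {"time": {"$gte": 1567310400.0, "$lt": 2209006800.0}},
    ]
}

print(matches(start, query))                        # True
print(matches(start, {"num_points": {"$gt": 50}}))  # False: the run has 3 points
```

The $and query here has the same shape as the one produced by chaining two searches: each refinement adds another condition that a run must satisfy.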

Once we have a result catalog that we are happy with, we can list the entries

via list(search_results), access them individually by name, as in

search_results[SOME_UID], or loop through them:

In [16]: for uid, run in search_results.items():

....: ...

....:

Access Data

Suppose we have a run of interest.

In [17]: run = raw[uid]

A given run contains multiple logical tables. The number of these tables and their names varies by the particular experiment, but two common ones are

‘primary’, the main data of interest, such as a time series of images

‘baseline’, readings taken at the beginning and end of the run for alignment and sanity-check purposes

To explore a run, we can open its entry by calling it like a function with no arguments:

In [18]: run() # or, equivalently, run.get()

Out[18]: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5:

args:

entry: !!python/object:databroker.core.Entry

args: []

cls: databroker.core.Entry

kwargs:

name: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

description: {}

driver: databroker.core.BlueskyRunFromGenerator

direct_access: forbid

args:

gen_args: !!python/tuple

- data/e38f6b4b-bc44-48e3-acbc-2be7c07d58d5.jsonl

gen_func: &id003 !!python/name:databroker._drivers.jsonl.gen ''

gen_kwargs: {}

get_filler: &id004 !!python/object/apply:functools.partial

args:

- &id001 !!python/name:event_model.Filler ''

state: !!python/tuple

- *id001

- !!python/tuple []

- handler_registry: !!python/object:event_model.HandlerRegistryView

_handler_registry:

NPY_SEQ: !!python/name:ophyd.sim.NumpySeqHandler ''

inplace: false

root_map: {}

- null

transforms:

descriptor: &id002 !!python/name:databroker.core._no_op ''

resource: *id002

start: *id002

stop: *id002

cache: null

parameters: []

metadata:

start:

detectors:

- img

hints:

dimensions:

- - - motor

- primary

motors:

- motor

num_intervals: 2

num_points: 3

plan_args:

args:

- SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'],

configuration_attrs=['velocity', 'acceleration'])

- -1

- 1

detectors:

- "SynSignalWithRegistry(name='img', value=array([[1., 1., 1., 1., 1.,\

\ 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1.,\

\ 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1.,\

\ 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1.,\

\ 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1.,\

\ 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1.,\

\ 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1.,\

\ 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1.,\

\ 1.]]), timestamp=1604527923.2121077)"

num: 3

per_step: None

plan_name: scan

plan_pattern: inner_product

plan_pattern_args:

args:

- SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'],

configuration_attrs=['velocity', 'acceleration'])

- -1

- 1

num: 3

plan_pattern_module: bluesky.plan_patterns

plan_type: generator

proposal_id: 12345

scan_id: 2

time: 1604527923.2195344

uid: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

versions:

bluesky: 1.6.7

ophyd: 1.5.4

stop:

exit_status: success

num_events:

baseline: 2

primary: 3

reason: ''

run_start: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

time: 1604527923.2358866

uid: 6b489ee3-cfeb-45a3-bd56-d42d691990cb

catalog_dir: null

getenv: true

getshell: true

catalog:

cls: databroker._drivers.jsonl.BlueskyJSONLCatalog

args: []

kwargs:

metadata:

catalog_dir: /home/travis/build/bluesky/databroker/doc/source/_catalogs/

paths: data/*.jsonl

handler_registry:

NPY_SEQ: ophyd.sim.NumpySeqHandler

name: raw

gen_args: !!python/tuple

- data/e38f6b4b-bc44-48e3-acbc-2be7c07d58d5.jsonl

gen_func: *id003

gen_kwargs: {}

get_filler: *id004

transforms:

descriptor: *id002

resource: *id002

start: *id002

stop: *id002

description: ''

driver: databroker.core.BlueskyRunFromGenerator

metadata:

catalog_dir: null

start: !!python/object/new:databroker.core.Start

dictitems:

detectors: &id005

- img

hints: &id006

dimensions:

- - - motor

- primary

motors: &id007

- motor

num_intervals: 2

num_points: 3

plan_args: &id008

args:

- SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'],

configuration_attrs=['velocity', 'acceleration'])

- -1

- 1

detectors:

- "SynSignalWithRegistry(name='img', value=array([[1., 1., 1., 1., 1., 1.,\

\ 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n\

\ [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1.,\

\ 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1.,\

\ 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1.,\

\ 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1.,\

\ 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n\

\ [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]]), timestamp=1604527923.2121077)"

num: 3

per_step: None

plan_name: scan

plan_pattern: inner_product

plan_pattern_args: &id009

args:

- SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'],

configuration_attrs=['velocity', 'acceleration'])

- -1

- 1

num: 3

plan_pattern_module: bluesky.plan_patterns

plan_type: generator

proposal_id: 12345

scan_id: 2

time: 1604527923.2195344

uid: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

versions: &id010

bluesky: 1.6.7

ophyd: 1.5.4

state:

detectors: *id005

hints: *id006

motors: *id007

num_intervals: 2

num_points: 3

plan_args: *id008

plan_name: scan

plan_pattern: inner_product

plan_pattern_args: *id009

plan_pattern_module: bluesky.plan_patterns

plan_type: generator

proposal_id: 12345

scan_id: 2

time: 1604527923.2195344

uid: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

versions: *id010

stop: !!python/object/new:databroker.core.Stop

dictitems:

exit_status: success

num_events: &id011

baseline: 2

primary: 3

reason: ''

run_start: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

time: 1604527923.2358866

uid: 6b489ee3-cfeb-45a3-bd56-d42d691990cb

state:

exit_status: success

num_events: *id011

reason: ''

run_start: e38f6b4b-bc44-48e3-acbc-2be7c07d58d5

time: 1604527923.2358866

uid: 6b489ee3-cfeb-45a3-bd56-d42d691990cb

We can also use tab-completion, as in run[' TAB, to see the contents.

That is, the Run is yet another Catalog, and its contents are the logical

tables of data. Finally, let’s get one of these tables.

In [19]: ds = run.primary.read()

In [20]: ds

Out[20]:

<xarray.Dataset>

Dimensions: (dim_0: 10, dim_1: 10, time: 3)

Coordinates:

* time (time) float64 1.605e+09 1.605e+09 1.605e+09

Dimensions without coordinates: dim_0, dim_1

Data variables:

motor (time) float64 -1.0 0.0 1.0

motor_setpoint (time) float64 -1.0 0.0 1.0

img (time, dim_0, dim_1) float64 1.0 1.0 1.0 1.0 ... 1.0 1.0 1.0

This is an xarray.Dataset. You can access specific columns

In [21]: ds['img']

Out[21]:

<xarray.DataArray 'img' (time: 3, dim_0: 10, dim_1: 10)>

array([[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]]])

Coordinates:

* time (time) float64 1.605e+09 1.605e+09 1.605e+09

Dimensions without coordinates: dim_0, dim_1

Attributes:

object: img

do mathematical operations

In [22]: ds.mean()

Out[22]:

<xarray.Dataset>

Dimensions: ()

Data variables:

motor float64 0.0

motor_setpoint float64 0.0

img float64 1.0

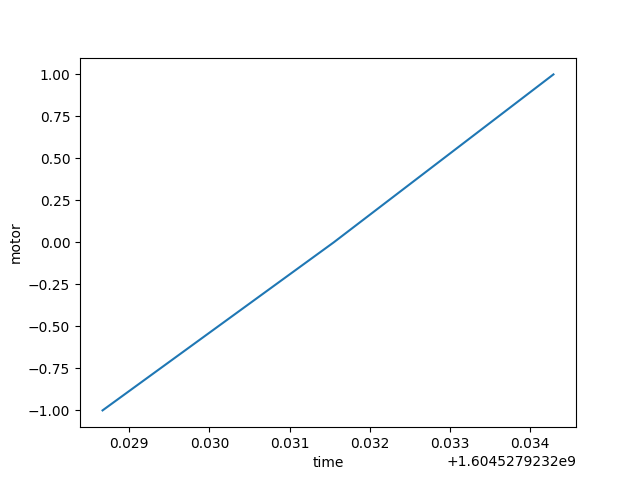

make quick plots

In [23]: ds['motor'].plot()

Out[23]: [<matplotlib.lines.Line2D at 0x7fe8bc1878d0>]

and much more. See the documentation on xarray.

If the data is large, it can be convenient to access it lazily, deferring the

actual network or disk I/O. To do this, replace read() with

to_dask(). You still get back an xarray.Dataset, but it contains

placeholders that will fetch the data in chunks and only as needed, rather than

greedily pulling all the data into memory from the start.

In [24]: ds = run.primary.to_dask()

In [25]: ds

Out[25]:

<xarray.Dataset>

Dimensions: (dim_0: 10, dim_1: 10, time: 3)

Coordinates:

* time (time) float64 1.605e+09 1.605e+09 1.605e+09

Dimensions without coordinates: dim_0, dim_1

Data variables:

motor (time) float64 -1.0 0.0 1.0

motor_setpoint (time) float64 -1.0 0.0 1.0

img (time, dim_0, dim_1) float64 1.0 1.0 1.0 1.0 ... 1.0 1.0 1.0

See the documentation on dask.

TODO: This is displaying numpy arrays, not dask. Illustrating dask here might require standing up a server.

Explore Metadata

Everything recorded at the start of the run is in run.metadata['start'].

In [26]: run.metadata['start']

Out[26]:

Start({'detectors': ['img'],

'hints': {'dimensions': [[['motor'], 'primary']]},

'motors': ['motor'],

'num_intervals': 2,

'num_points': 3,

'plan_args': {'args': ["SynAxis(prefix='', name='motor', "

"read_attrs=['readback', 'setpoint'], "

"configuration_attrs=['velocity', 'acceleration'])",

-1,

1],

'detectors': ["SynSignalWithRegistry(name='img', "

'value=array([[1., 1., 1., 1., 1., 1., 1., 1., '

'1., 1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.],\n'

' [1., 1., 1., 1., 1., 1., 1., 1., 1., '

'1.]]), timestamp=1604527923.2121077)'],

'num': 3,

'per_step': 'None'},

'plan_name': 'scan',

'plan_pattern': 'inner_product',

'plan_pattern_args': {'args': ["SynAxis(prefix='', name='motor', "

"read_attrs=['readback', 'setpoint'], "

"configuration_attrs=['velocity', "

"'acceleration'])",

-1,

1],

'num': 3},

'plan_pattern_module': 'bluesky.plan_patterns',

'plan_type': 'generator',

'proposal_id': 12345,

'scan_id': 2,

'time': 1604527923.2195344,

'uid': 'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5',

'versions': {'bluesky': '1.6.7', 'ophyd': '1.5.4'}})

Information only knowable at the end, like the exit status (success, abort,

fail), is stored in run.metadata['stop'].

In [27]: run.metadata['stop']

Out[27]:

Stop({'exit_status': 'success',

'num_events': {'baseline': 2, 'primary': 3},

'reason': '',

'run_start': 'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5',

'time': 1604527923.2358866,

'uid': '6b489ee3-cfeb-45a3-bd56-d42d691990cb'})
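The start and stop metadata can be combined to answer simple questions about a run, such as when it began and how long it took. The sketch below uses plain dicts in place of the Start and Stop objects, with values copied from the output above. With a real run you would read the same keys from run.metadata['start'] and run.metadata['stop'].

```python
from datetime import datetime, timezone

# Values copied from the run.metadata output shown above.
start = {"time": 1604527923.2195344, "scan_id": 2}
stop = {
    "time": 1604527923.2358866,
    "exit_status": "success",
    "num_events": {"baseline": 2, "primary": 3},
}

# The 'time' fields are Unix timestamps, so their difference is in seconds.
duration = stop["time"] - start["time"]
started_at = datetime.fromtimestamp(start["time"], tz=timezone.utc)
total_events = sum(stop["num_events"].values())

print(f"scan {start['scan_id']} started at {started_at.isoformat()}")
print(f"duration: {duration * 1000:.1f} ms, events: {total_events}")
```

This simulated run lasted only a few milliseconds; a real acquisition would typically show a much longer duration.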

The v1 API stored metadata about the devices involved and their configuration,

accessed using descriptors. This is roughly equivalent to what is available

in primary.metadata. It is quite large:

In [28]: run.primary.metadata

Out[28]:

{'start': {'uid': 'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5',

'time': 1604527923.2195344,

'versions': {'ophyd': '1.5.4', 'bluesky': '1.6.7'},

'proposal_id': 12345,

'scan_id': 2,

'plan_type': 'generator',

'plan_name': 'scan',

'detectors': ['img'],

'motors': ['motor'],

'num_points': 3,

'num_intervals': 2,

'plan_args': {'detectors': ["SynSignalWithRegistry(name='img', value=array([[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],\n [1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]]), timestamp=1604527923.2121077)"],

'num': 3,

'args': ["SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'], configuration_attrs=['velocity', 'acceleration'])",

-1,

1],

'per_step': 'None'},

'hints': {'dimensions': [[['motor'], 'primary']]},

'plan_pattern': 'inner_product',

'plan_pattern_module': 'bluesky.plan_patterns',

'plan_pattern_args': {'num': 3,

'args': ["SynAxis(prefix='', name='motor', read_attrs=['readback', 'setpoint'], configuration_attrs=['velocity', 'acceleration'])",

-1,

1]}},

'stop': {'run_start': 'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5',

'time': 1604527923.2358866,

'uid': '6b489ee3-cfeb-45a3-bd56-d42d691990cb',

'exit_status': 'success',

'reason': '',

'num_events': {'baseline': 2, 'primary': 3}},

'descriptors': [Descriptor({'configuration': {'img': {'data': {'img': '6c99e87b-7352-4aa6-a72a-99fb457bb73a/0'},

'data_keys': {'img': {'dtype': 'array',

'external': 'FILESTORE',

'precision': 3,

'shape': [10, 10],

'source': 'SIM:img'}},

'timestamps': {'img': 1604527923.225212}},

'motor': {'data': {'motor_acceleration': 1,

'motor_velocity': 1},

'data_keys': {'motor_acceleration': {'dtype': 'integer',

'shape': [],

'source': 'SIM:motor_acceleration'},

'motor_velocity': {'dtype': 'integer',

'shape': [],

'source': 'SIM:motor_velocity'}},

'timestamps': {'motor_acceleration': 1604527918.8515399,

'motor_velocity': 1604527918.8514783}}},

'data_keys': {'img': {'dtype': 'array',

'external': 'FILESTORE',

'object_name': 'img',

'precision': 3,

'shape': [10, 10],

'source': 'SIM:img'},

'motor': {'dtype': 'number',

'object_name': 'motor',

'precision': 3,

'shape': [],

'source': 'SIM:motor'},

'motor_setpoint': {'dtype': 'number',

'object_name': 'motor',

'precision': 3,

'shape': [],

'source': 'SIM:motor_setpoint'}},

'hints': {'img': {'fields': []}, 'motor': {'fields': ['motor']}},

'name': 'primary',

'object_keys': {'img': ['img'], 'motor': ['motor', 'motor_setpoint']},

'run_start': 'e38f6b4b-bc44-48e3-acbc-2be7c07d58d5',

'time': 1604527923.2271156,

'uid': '6a3cd731-6a10-47a5-86cb-07211a82d885'})],

'dims': {'dim_0': 10, 'dim_1': 10, 'time': 3},

'data_vars': {'motor': ['time'], 'motor_setpoint': ['time'], 'img': ['time']},

'coords': ('time',)}

It is a little flatter, with a different layout, than what the v1 API returned.
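Most of that output is the descriptor, which describes each field in the table. As a hedged illustration of how it can be navigated, the sketch below uses a trimmed plain-dict copy of the 'primary' descriptor shown above. With a real run, the corresponding object would be the first item of run.primary.metadata['descriptors'].

```python
# A trimmed copy of the 'primary' descriptor shown above (plain dict stand-in).
descriptor = {
    "name": "primary",
    "data_keys": {
        "img": {"dtype": "array", "external": "FILESTORE",
                "shape": [10, 10], "source": "SIM:img"},
        "motor": {"dtype": "number", "shape": [], "source": "SIM:motor"},
        "motor_setpoint": {"dtype": "number", "shape": [],
                           "source": "SIM:motor_setpoint"},
    },
    "object_keys": {"img": ["img"], "motor": ["motor", "motor_setpoint"]},
}

# Fields marked 'external' have their values stored outside the documents.
external = [key for key, info in descriptor["data_keys"].items()
            if "external" in info]

# Map each field back to the device that produced it.
field_to_device = {
    field: device
    for device, fields in descriptor["object_keys"].items()
    for field in fields
}

print(external)
print(field_to_device)
```

Here only img is external, which matches the fact that it is the one array-valued field in the table.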

Replay Document Stream

Bluesky is built around a streaming-friendly representation of data and

metadata. (See event-model.) To access the run—effectively replaying the

chronological stream of documents that were emitted during data

acquisition—use the documents() method.

Changed in version 1.2.0: The documents method was formerly named canonical. The old name is

still supported but deprecated.

In [29]: run.documents(fill='yes')

Out[29]: <generator object BlueskyRun.documents at 0x7fe8bc0c9750>

This generator yields (name, doc) pairs and can be fed into streaming

visualization, processing, and serialization tools that consume this

representation, such as those provided by bluesky.

The keyword argument fill is required. Its allowed values are 'yes'

(numpy arrays), 'no' (Datum IDs), and 'delayed' (dask arrays, still

under development).
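A consumer of this stream is simply a loop over (name, doc) pairs that branches on the document name. The sketch below shows that pattern without databroker. The function fake_documents is a hypothetical stand-in for run.documents(fill='no'), and its documents are heavily trimmed. A real stream carries more fields per document and may include other document types.

```python
from collections import Counter


def fake_documents():
    """Hypothetical stand-in for run.documents(fill='no'); documents are trimmed."""
    yield "start", {"uid": "e38f6b4b-bc44-48e3-acbc-2be7c07d58d5", "scan_id": 2}
    yield "descriptor", {"uid": "6a3cd731-6a10-47a5-86cb-07211a82d885",
                         "name": "primary"}
    for seq_num, position in enumerate([-1.0, 0.0, 1.0], start=1):
        yield "event", {"seq_num": seq_num, "data": {"motor": position}}
    yield "stop", {"exit_status": "success"}


counts = Counter()
positions = []
for name, doc in fake_documents():
    counts[name] += 1          # tally documents by type
    if name == "event":
        positions.append(doc["data"]["motor"])  # collect one field per event

print(dict(counts))
print(positions)
```

The same loop works unchanged on the generator returned by the documents() method, since it also yields (name, doc) pairs.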